Can you hear me? The primary task for every voice-first interface is the same: access to acoustic information.

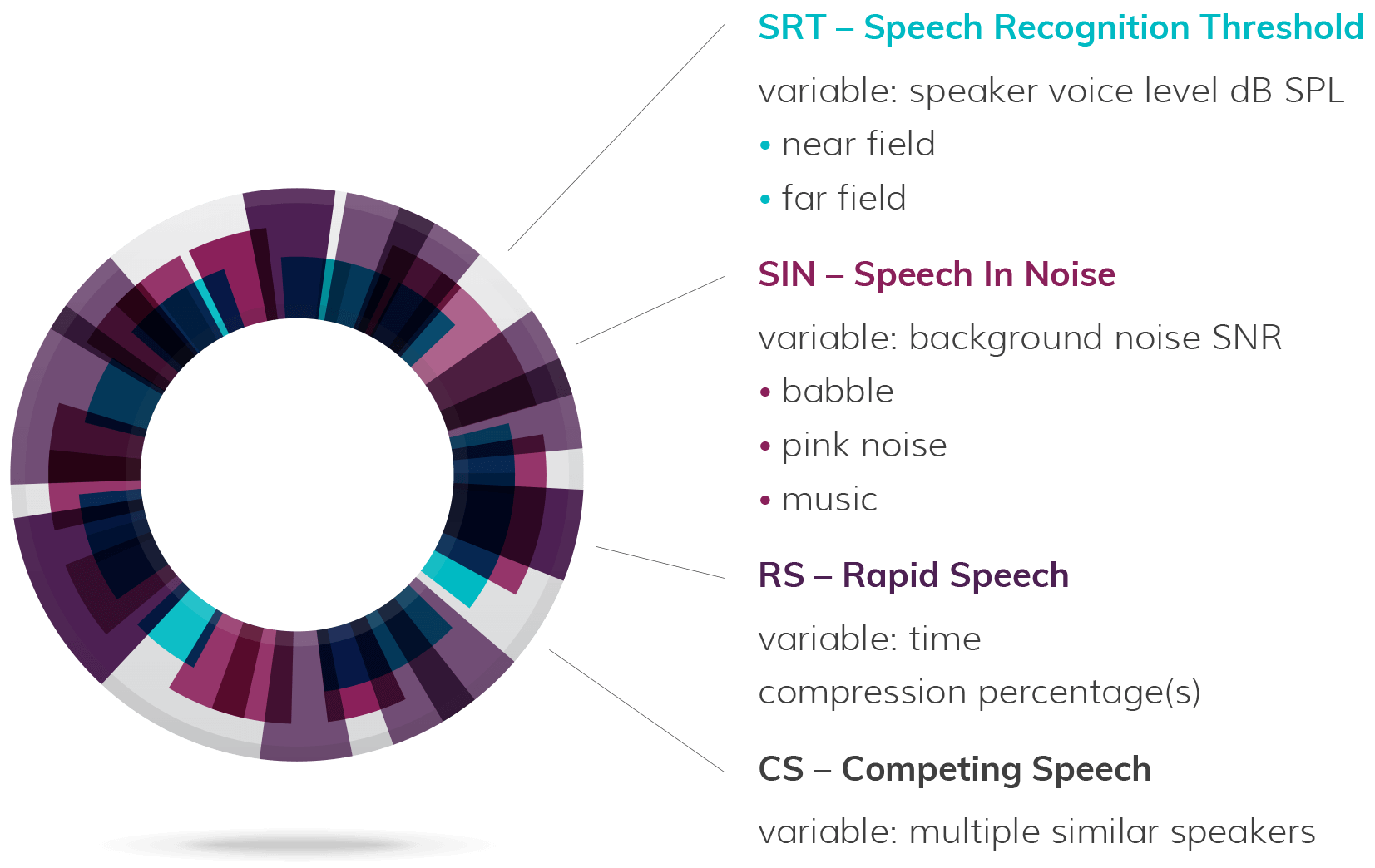

ASR Audiogram

Drawing on decades of experience from the field of audiology, we have created a software suite that evaluates the hearing capabilities of AI-powered virtual assistants such as Alexa, Siri, Cortana, Bixby and Google Assistant. We apply our protocols across spoken-language variables such as volume level, background noise, distance and cadence. The result is a detailed profile of each virtual assistant's hearing ability.

Inclusivity Index

Once we have benchmarked a device with our ASR Audiogram, we evaluate the same hearing capabilities across natural variations in speech associated with gender, age and ethnicity. This multi-layered approach produces our Inclusivity Index and helps identify potential gaps in performance.

Black-box Evaluations

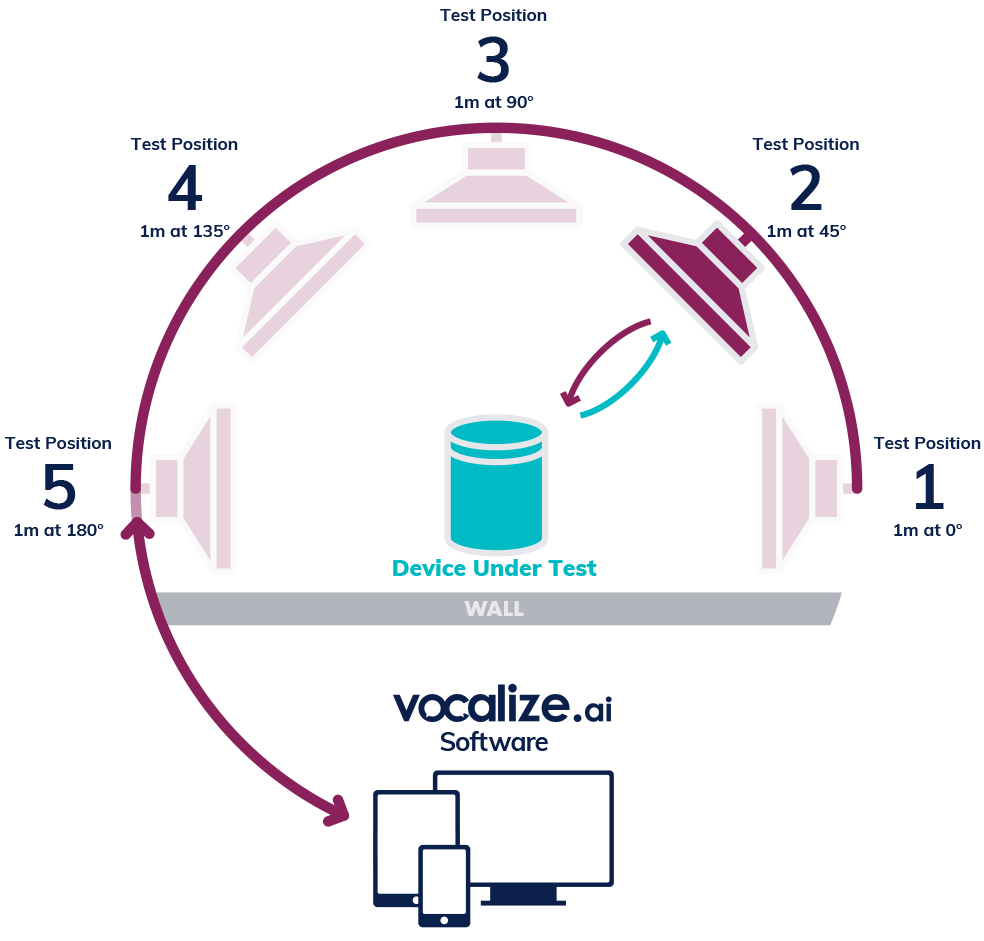

Do you want to know how well your product stacks up against your competitors? Do you want to know whether your latest set of software changes really improved performance? Our system is designed to support true black-box evaluations that closely mirror real-world performance and real human expectations. This enables us to evaluate any voice-first product with any virtual assistant, in any form factor, in any environment and in any language.

Our System

Leveraging human performance benchmarks, we have created a system designed to answer the fundamental voice interface questions: Can you hear me? Can you understand me?

Secondary goals include making sure the system is standards-based, time-efficient and cost-effective (better, faster, cheaper).

Can you hear me?

The primary task for any voice-first interface is access to acoustic information. To assess this capability, we rely on the field of audiology, which has been studying and evaluating human hearing for decades. Several of our protocols (e.g. speech reception threshold (SRT) and speech-in-noise (SIN) testing) are modeled directly on the procedures used to test humans. This provides a reliable, repeatable standard for evaluating and tracking virtual assistant performance as the technology matures. This work is ongoing, but large sections were completed in 2018. Partial results are available for review on this website, and these services are available now to interested partners.
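In audiology, the speech reception threshold is the presentation level at which a listener recognizes about half of the test items. One common way to estimate such a threshold is an adaptive 1-up/1-down staircase: make the next item quieter after a correct response and louder after a miss, then average the levels at which the direction reverses. The Python sketch below illustrates that generic technique only and is not necessarily the exact procedure we use. The `recognizes` callback (present one test phrase at a given level and report whether the assistant transcribed it correctly) is a hypothetical hook, and the start level, step size and stopping rule are assumed values.

```python
from typing import Callable, List


def estimate_srt(
    recognizes: Callable[[float], bool],
    start_db: float = 60.0,
    step_db: float = 5.0,
    max_reversals: int = 8,
    max_trials: int = 100,
) -> float:
    """Estimate the ~50%-correct level with a 1-up/1-down staircase.

    recognizes(level_db) is a hypothetical hook: it presents one test
    phrase at level_db and returns True if the assistant got it right.
    """
    level = start_db
    last_direction = 0  # -1 = stepping down, +1 = stepping up, 0 = no step yet
    reversals: List[float] = []

    for _ in range(max_trials):
        correct = recognizes(level)
        # Quieter after a correct response, louder after a miss.
        direction = -1 if correct else 1
        # A change of direction marks a reversal; record the level where it happened.
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)
            if len(reversals) >= max_reversals:
                break
        last_direction = direction
        level += direction * step_db

    if not reversals:
        raise RuntimeError("staircase never reversed; adjust start level or step size")
    # The mean of the reversal levels estimates the 50% point.
    return sum(reversals) / len(reversals)
```

With a simulated recognizer that succeeds at or above 42 dB (`estimate_srt(lambda level: level >= 42)`), the staircase alternates between 40 and 45 dB and returns 42.5. For speech-in-noise testing, the same loop could vary the signal-to-noise ratio instead of the absolute level.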

Can you understand me?

Hearing is the first step, and it is naturally followed by understanding (comprehension). We define this step as “the correct interpretation of acoustic and linguistic information.” We will be designing these protocols throughout 2019; more information will follow soon.